Taking Civic Action with Zines

In this free webinar, join the National Museum of American History and learn new ways to civically engage your students! You’ll leave with resources and strategies to explore museum objects, build art skills, create zines, and encourage your students to engage in civic action.

Everything Under the Sun*

*Well...maybe not everything... but the Smithsonian Learning Lab puts the treasures of the world's largest museum, education, and research complex within reach. The Lab is a free, interactive platform for discovering millions of authentic digital resources, creating content with online tools, and sharing in the Smithsonian's expansive community of knowledge and learning.

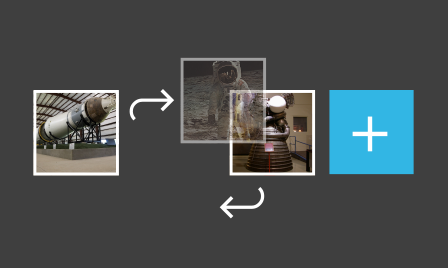

Discover

What will you find in the Smithsonian Learning Lab?

Millions of Smithsonian digital images, recordings, texts, and videos in history, art and culture, and the sciences

Thousands of examples of resources organized and structured for teaching and learning by educators and subject experts

Who uses the Learning Lab?

In short... it's people like you. We created the Learning Lab so anyone can quickly discover and adapt authoritative subject-relevant resources.

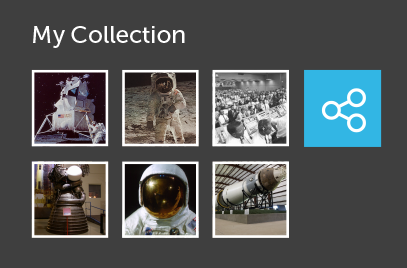

Browse individual resources by type

Browse model collections on different topics

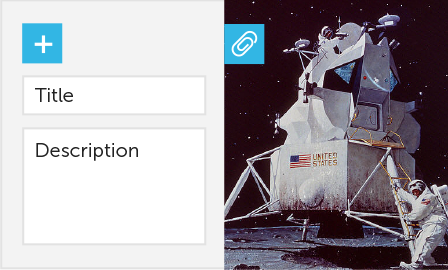

Create

Create collections of resources to engage learners, or freely adapt ones already made by Learning Lab users like you.

Use Learning Lab tools to customize and enhance your collections.

Copy a Collection

Adapt exemplars to personalize them for your learners.

Upload

Upload aids and resources you already use and value.

Annotations and Hotspots

Make a collection student-friendly, with information, focus, and interactivity.

Quizzes and Assignments

Build in guidance and supports for students.

Browse ready-to-use model collections by educational use

Browse ready-to-use model collections by learning strategy or theme

Learn

Grow your skills and stay connected with the Learning Lab Community

News and Teaching Tips

12/08/23

Hands-on Making Activities

Professional Development and Events

Distance Learning with the Smithsonian

The Smithsonian is committed to supporting the distance learning needs of educators and students.

Visit our Distance Learning Page for resources, trainings, and a full calendar of live events hosted by experts from across the Smithsonian.

Recent Events

March 2024

Taking Civic Action with Zines

Join Eden Cho of the National Museum of American History to explore how you can incorporate museum objects and art skills to civically engage your students! You’ll leave with resources and strategies to incorporate museum objects in your classroom, create zines, and encourage your students to engage in civic action.
